Here at Kerika, we often get asked how we do Scrum as a distributed team.

Here’s the model we have evolved, which works for us mainly because we are the very essence of a distributed Agile team: we have people working together on the same product from locations that are 10,000 miles apart!

And this means that we are the most enthusiastic consumers of our products: we use Kerika to manage every part of our business and we only build what we would ourselves love to use.

Here’s the basic outline of our Scrum model:

Each Sprint is 2 weeks long: that that works well for us; other folks might find that 3 weeks or 4 weeks i better. Pick what works for you.

Each Sprint begins with Sprint Planning, where the Scrum Team gets together with the Product Owner to decide which cards will be pull from our main Product Backlog into the Sprint Backlog.

Each Sprint is organized as a separate Scrum Board: this makes it really easy for us to concentrate upon needs to get delivered in that particular Sprint, without getting distracted by what was done in the past or what remains to be done.

And Kerika makes it really easy to pull cards (literally!) from the Backlog onto a Scrum Board, and then hide the Backlog from view so it doesn’t distract the Team while the Sprint is underway.

Half-way the Sprint, at the end of the first week, we do a gut-check: does the Sprint look like it is going reasonably well? We don’t ask if it is going perfectly: almost no Sprint does; what we are looking for is any indication that the Sprint is going to severely under-deliver in terms of the Team’s commitments to the Product Owner.

We could do these gut-checks every day during our Daily Standups, but in the first part of a Sprint cycle these can often give us false positives: it’s easy to tell early on if a Sprint is going to be disastrous, but it’s hard to tell for sure that it is actually going to end well. But about midway through the Sprint we start to have a more reliable sense for how things may turn out.

In keeping with the Scrum model, our goal is to complete a potentially shippable set of features and bug fixes with each Sprint, although this doesn’t necessarily mean that we will always ship what gets built after each Sprint. (More on that later.)

For each feature or bug, however large or small, we make sure that we have design and testing baked into the process:

- An analysis document is prepared and attached to the card, either as a Google Doc or as Box document. (We had been using Kerika+Google exclusively for years, but have recently switched to Kerika+Box since we completed our Box integration.)

- The document is often just a few paragraphs long, because we always take cards representing large features (or other big work items) and break them up into smaller cards, so that no card is likely to take more than a day’s work. Kerika makes it really easy to link cards together, so it’s easy to trace work across multiple cards.

- For bugs, the attached document describes the expected behavior, the actual behavior, and the root cause analysis. Very frequently, screenshots showing the bugs are attached to the cards.

- For new features, several documents may be attached, all quite small: there may be a high-level analysis document and a separate low-level design document.

- For all features and bugs, we do test planning at the time we take on the work: for back-end (server) work we rely primarily on JUnit for writing automated tests; for front-end (UI) work we have found that automated testing is not very cost-effective, and instead rely more on manual testing. The key is to be as “test-driven” in our development as possible.

There are several benefits from doing formal planning, which some folks seem to view as being antithetical to an Agile model:

- It helps find holes in the original requirements or UI design: good technical analysis finds all the edge cases that are overlooked when a new feature is being conceptualized.

- It helps ensure that requirements are properly interpreted by the Team: the back-and-forth of analysis and reviewing the requirement helps ensure that the Product Owner and the Team are in synch on what needs to get done, which is especially important for new features, of course, but can also be important to understand the severity and priority of bugs.

- It deliberately slows down the development pace to the “true” pace, by ensuring that time and effort for testing, especially the development of automated tests, is properly accounted for. Without this, it’s easy for a team to quickly hack new features, which is great at first but leads to unmaintainable and unstable code very soon.

At the end of the 2-week cycle, the Team prepares to end the Sprint…

We like to talk about Sprints as “buses”: a bus comes by on a regular schedule, and if you are ready and waiting at the bus stop, you can get on the bus.

But if you are not ready when the bus comes along, you are going to have to wait for the next bus, which thankfully does come by on a regular 2-week schedule.

This metaphor helps the Team understand that Sprints are time-boxed, not feature-boxed: in other words, at the end of every 2 weeks a Sprint will end, regardless of whether a feature is complete or not. If the feature is complete, it catches the bus; otherwise it will have to wait for the next bus.

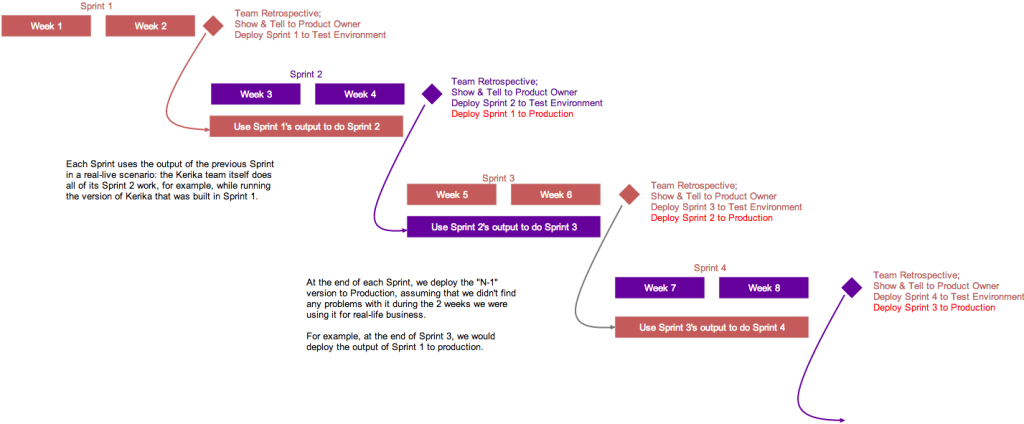

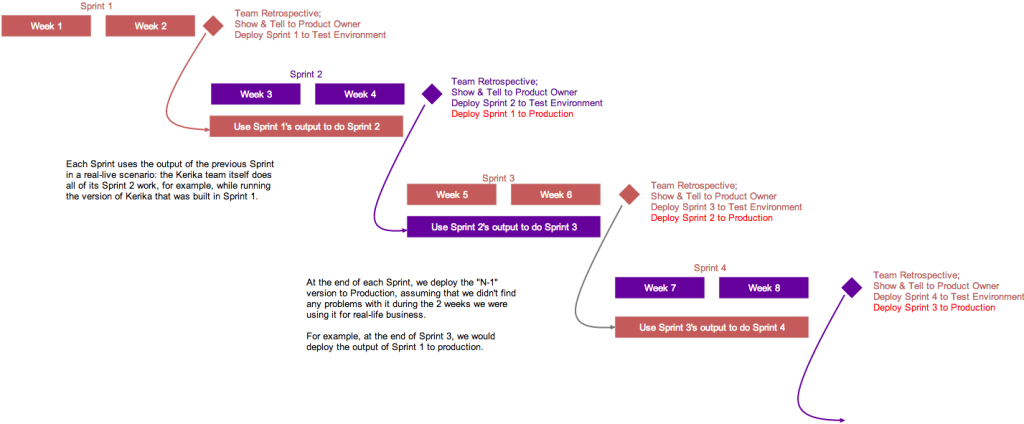

And here’s where the Kerika team differs from many other Scrum teams, particularly those that don’t consume their own products:

- At the end of each Sprint, we do the normal Sprint Retrospective and Show & Tell for the Product Owner.

- But, we also then take the output of the Sprint and deploy it to our test environment.

- Our test environment is the one we actually use on a daily basis: we don’t use the production environment as often, preferring to risk all of our work by taking the latest/greatest version of the software on the test environment.

This forces us to use our newest software for real: for actual business use, which is a much higher bar to pass than any ordinary testing or QA, because actual business use places a higher premium on usability than regular QA can achieve.

(And, in fact, there have been instances where we have built features that passed testing, but were rejected by the team as unusable and never released.)

This is illustrated above: the version of Kerika that’s built in Sprint 1 is used by the team to work on Sprint 2.

This is where the rubber meets the road: the Kerika Team has to build Sprint 2, while using what was built in the previous Sprint. If it isn’t good enough, it gets rejected.

At the end of Sprint 2, we will release the output of Sprint 1 to production. By this time it will have been used in a real sense by the Kerika Team for at least 2 weeks, post regular QA, and we will have a high confidence that the new features and bug fixes are solid and truly usable.

We could summarize our model by saying that our production releases effectively lag our Sprint output by one Sprint, which gives us the change to “eat our own dogfood” before we offer it to anyone else.

You can see the actual Whiteboard project for this process flow here.